At this year’s Regional ESRI Users Group conference in Whangarei, I presented Vision’s work on applying land change modelling with machine learning software to predict where future subdivisions may occur in the Bay of Islands. This is going to be a long post; so for those wanting to skip strait to the results you can have a play with the following slides. Clicking between the numbers at the top, you can see the transition between:

- Parcel Size (m²) in 2003,

- Parcel Size (m²) in 2018,

- Projected Parcel Size (m²) in 2038 (Inclusive Model Scenario), and

- Projected Parcel Size (m²) in 2038 (Exclusive Model Scenario)

[smartslider3 slider=3]

For those wanting to know more, I should first outline how this model is different from typical land change modelling. Land change models are commonly applied to changes in physical characteristics of a geographic area. This can be the land cover (eg forest converting to plains) or land use (farms land to residential). Our project is trying to measure and predict a non-physical attribute, legal boundaries. More Specifically, the transition of larger parcel size to smaller parcels. Our study area covers the Bay of Islands change from 2003 to 2018, and project the new subdivisions out to 2038.

We used machine learning software, developed by Clark Labs, to perform the raster analysis. The process has four primary stages:

- Data Preparation

- Measuring change

- Modelling Susceptibility

- Predictive Modelling

Stage 1. Data Preparation

I won’t spend much time reliving this stage, partially because its boring, mostly because it turned out to be an arduous process that took about 50% of the time dedicated to the project. What to know:

- All data was converted to a raster format;

- To work in the software, all data was converted to share the same CRS, extent, and grid size;

- This project analyzed subdivision or primary parcels (not land titles);

- Only parcels which were privately owned were retained for the model; and

- I did not have access to a historic data set, so the historic (2003) parcels were approximated using a reductive model I built in ArcGIS. While this will likely introduce some level of error, about 96% of parcels were able to be filtered.

Stage 2. Measuring Change

After all the data is created and imported, the first step for modelling land change is to find the change which happened over the base period (from 2003 to 2018). The subdivision being measured over this period was set at four different thresholds:

- 5,000 m²

- 10,000 m²

- 20,000 m²

- 40,000 m²

The two layers are compared, and any change is extracted. These areas are what will be used in the later stages to determine the strength of correlation between the change observed and the driver variables. The net change by area and where the change occurred are shown in the table and animation below. Net change indicates how much new area for each category was gained or lost between 2003 and 2018.

| Table 1. Net Transition by Parcel Threshold from 2003 to 2018 |

| Threshold | Cells | Hectares |

| Sub 5,000 m² | 44,665 | 285 |

| Sub 10,000 m² | 29,393 | 188 |

| Sub 20,000 m² | 46,783 | 299 |

| Sub 40,000 m² | 104,714 | 671 |

| Over 40,000 m² | -227,583 | -1,456 |

As you can see, land in the Bay of Island area has been shifting to smaller parcels with subdivision activity being strongest in the Kerikeri area.

Stage 3. Modelling Susceptibility

This is the point where we get to the real meat of the process. In short the software uses Machine Learning Algorithms, more specifically Artificial Neural Network, to:

- Take a Sample of 20,000 cells (50% transition, 50% persistence),

- Combine the sample with the driver layers to evaluate and weight susceptibility criteria, and

- perform 5,000 iterations of each model.

The change layer you have already seen in in Stage 2. What is important to note here, the model is trying to understand two things. First is transition, or where over the base period cells change. Second is persistence, or where over the base period cells did not change. Both are equally important to understanding how subdivision may look in the future.

When the transition and persistence sample is taken, the model begins comparing where change did and did not happen with the driver layers. These include:

- Distance from:

- Primary Roads,

- Secondary Roads,

- Waste Water Lines,

- Urban/Town Land cover (Anthropogenic Disturbances),

- Coast,

- Kerikeri (Township), and

- Coverage by:

- Slope,

- Elevation,

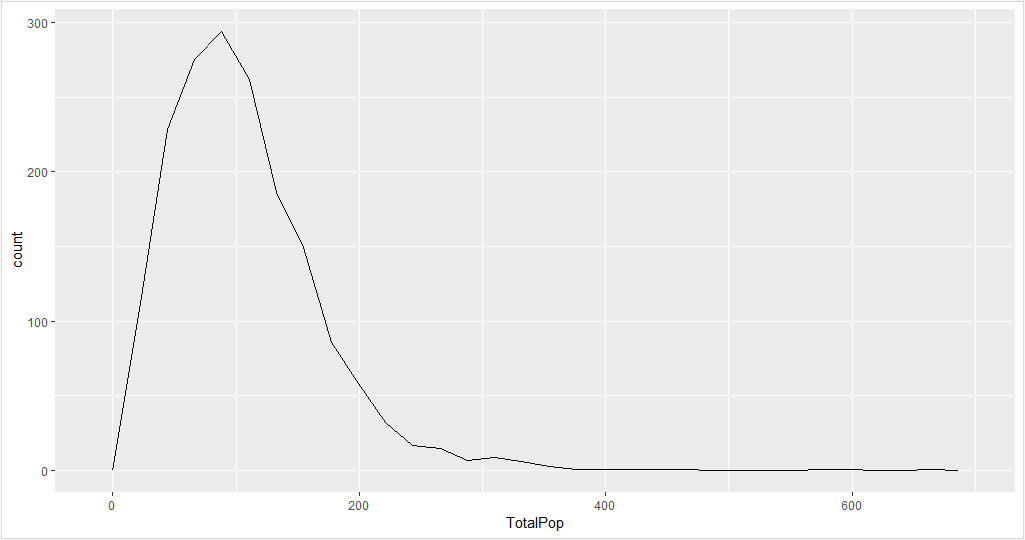

- Population Density,

- Land Cover Data Base, and

- District Plan Zoning.

[smartslider3 slider=2]

This process was performed for each parcel size threshold in two different scenarios. The first scenario was Inclusive. This means that all parcels beneath the size threshold are included in each model (eg. Sub 20k includes all parcels 20,000 m² and under). In this scenario we would have to assume that there is not much difference in how the driver variable act on the different size parcel.

The second scenario was Exclusive. This means that only parcels between the size threshold are included in each model (eg. Sub 20k only includes all parcels between 10,000 m² and 20,000 m²). In this scenario we are assuming that the driver variable act differently on parcels of different sizes.

The results for each model are shown in Table 2. and Table 3. The model accuracy is based on a blind sample in the model. After the processing of weighting and calibrating the driver variables against change and persistence, the software predicts where change or persistence will happen against an unknown sample. The percentage is how many times the prediction was correct. The skill measure beneath accuracy indicates what the model was better at predicting (higher being better). Lastly, the number in the column shows the driver variable’s rank in terms of how much influence it had on predicting transition and persistence.

| Table 2. Inclusive Model Results |

| Parameters | Sub 5K | Sub 10K | Sub 20K | Sub 40K |

| Model Accuracy | 89.2% | 84.4% | 83.9% | 81.7% |

| Transition Skill | 0.7487 | 0.6850 | 0.7432 | 0.7272 |

| Persistence Skill | 0.8172 | 0.6900 | 0.6138 | 0.5394 |

| Distance from: | Town | 5 | 11 | 8 | 5 |

Anthro-

pogenic | 2 | 9 | 1 | 1 |

| Coast | 9 | 5 | 5 | 4 |

| Waste Water | 6 | 10 | 6 | 7 |

| Roads Primary | 4 | 2 | 3 | 3 |

| Roads Secondary | 1 | 1 | 2 | 2 |

| Cover by: | Slope | 10 | 7 | 11 | 9 |

| Zoning | 8 | 8 | 6 | 7 |

| Elevation | 7 | 4 | 7 | 8 |

| LCDB | 11 | 6 | 10 | 11 |

| Pop Density | 3 | 3 | 9 | 10 |

| Table 3. Exclusive Model Results |

| Parameters | Sub 5K | Sub 10K | Sub 20K | Sub 40K |

| Model Accuracy | 89.1% | 84.1% | 84.3% | 81.0% |

| Transition Skill | 0.7631 | 0.7303 | 0.8142 | 0.7079 |

| Persistence Skill | 0.8019 | 0.6353 | 0.5566 | 0.5321 |

| Distance from: | Town | 5 | 3 | 8 | 8 |

Anthro-

pogenic | 1 | 1 | 1 | 1 |

| Coast | 9 | 6 | 5 | 4 |

| Waste Water | 6 | 4 | 3 | 7 |

| Roads Primary | 2 | 2 | 2 | 2 |

| Roads Secondary | 4 | 8 | 4 | 3 |

| Cover by: | Slope | 11 | 11 | 10 | 9 |

| Zoning | 8 | 7 | 7 | 6 |

| Elevation | 7 | 10 | 6 | 5 |

| LCDB | 10 | 5 | 9 | 10 |

| Pop Density | 3 | 9 | 11 | 11 |

Now that the model has figured out the weighting for each driver layer, they are combined into a layer that shows the susceptibility to transition for each size threshold. The heat maps bellow shows the results at each threshold. Dark red shows the areas with the highest susceptibility (the combination of drivers where most subdivision occurred) while blue shows area of low susceptibility (the combination of of drivers where little to no subdivision occurred).

[smartslider3 slider=4]

Comparing the results of the two scenarios, indicates that drivers may not act the same at all subdivision thresholds. In the result table for the inclusive model, you can see that the most and least influential drivers are less consistent than in the table for the exclusive model. You can also see the variation in the resulting heatmaps, where the exclusive model shows a wider spread of susceptibility for the larger parcel thresholds.

Stage 4. Predicting Future Subdivision

With the susceptibility heat maps generated, the last stage of the process can be completed. The projection uses a variation of Cellular Automata by:

- Taking the historic rate of change, meaning the observed number of cells (land area) that changed annually between 2003 and 2018,

- projecting the rate of change forward to the year 2038 (year chosen by me), and

- flipping (subdivide) the cells, prioritizing those with the highest susceptibility score and adjacent to or joining areas of prior subdivision activity.

This process assumes that:

- past drivers of change will maintain the same amount of influence on future subdivision,

- subdivision will happen at a similar rate to historic change, and

- areas of recent subdivision activity will influence future activity.

The scenarios were modeled with five year outputs from 2018 to 2038. The results are shown in the two slideshows below.

[smartslider3 slider=5]

[smartslider3 slider=6]

So What?

We are finally to the end of this long post and you may be wonder; “So what have we learned?”. Off the bat, it appears that land use change models operate reasonably well with non-physical land cover attributes. Though that may not excite most people, I’m already making a wish list of next projects.

More generally, we gained insights about subdivision trends in the Bay of Islands. First, we know the models had better accuracy for subdivision of smaller parcels, meaning the driver variable chosen had a stronger influence on predicting the outcomes; and additional/different drivers may need to be identified for larger parcel subdivision models.

Second, we know that there was a variation between the inclusive and exclusive model results, with the exclusive model being more stable. This indicates that other research related to subdivision may need to account for differences in parcel size.

Third, the accuracy of models drop as the parcel size gets large, and the drop is mostly attributed to lower persistence scores. This could mean (with the variables given) that knowing where the “holdouts” will be is harder to assess, and could be the topic of continued research.

Lastly, we now have layers that show where subdivision has occurred in the past and areas are most susceptible for subdivision in the future. Interestingly, the “hottest” areas form a V facing out from Kerikeri; with one arm reaching out through Waipapa/Kapiro and the other arm following Kerikeir Road towards Waimate North. I had a look at various layers and found that this formation largely followed the Kerikeri Irrigation scheme. Were I to refine this model, that would likely be a driver layer I’d test.

We could take the results further and start comparing them to layers associated with District and Regional Planning. For instance, we could see where zoning may need to be augmented to promote or restrict growth, and where potential areas of reverse sensitivity may be developing. The Land Use Capability layer for soils could be added, and the amount of highly versatile soils at high risk of subdivision could be assessed. Or we could see what habitats may be at risk of human encroachment.

There is also the option to add nuances to the model such as:

- testing new variables,

- incorporating roading development tool for dynamic (modeled) or scheduled (already planned) road generation, or

- using the “Incentive and Constraint” layer tool (eg. new zoning, Urban Growth Boundaries, or new conservation areas)

I’ll close by saying this project was only a first blush at modelling subdivision. There is still calibration and validation that should be performed before viewing the results as conclusive. However, it does serve as interesting starting point for understanding the future of land use in the Bay or Islands.

-Logan Ahmore